At the beginning of next year, Clive Holmes will attempt to do something remarkable. But you’d never guess it from meeting this mild-mannered psychiatrist with a hint of a Midlands accent. In fact, you could be sitting in his office in the Memory Assessment and Research Centre at Southampton University and be unaware that he was up to anything out of the ordinary – save for a small whiteboard behind his desk, on which he’s drawn a few amorphous blobs and squiggles. These, he’ll assure you, are components of the immune system.

As a psychiatrist, he’s had little formal training in immunology, but he has spent much of his time of late trying to figure out how immune cells in the body communicate with cells in the brain. These signals into the brain, he thinks, accelerate the rate at which neurons – nerve cells in the brain – are killed in late-stage Alzheimer’s disease, and at the beginning of next year he hopes to test whether blocking these signals can stop or slow the disease’s progression.

If he makes any dent in disease progression, he will be the first to do so. Despite the billions of pounds pumped into finding a cure over the last 30 years, there are currently no treatments that halt or slow the disease, and no strategies to prevent it.

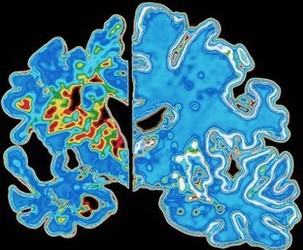

“Drug development has been largely focused on amyloid beta,” says Holmes, referring to the protein deposits that are characteristically seen in the brains of people with Alzheimer’s and are thought to be toxic to neurons, “but we’re seeing that even if you remove amyloid, it seems to make no difference to disease progression.”

He mentions two huge recent failures among drugs designed to remove amyloid. The plug has been pulled on bapineuzumab by its developers Johnson & Johnson and Pfizer after trials showed its inability to halt disease progression; and the wheels seem to be coming off Eli Lilly’s drug solanezumab after similarly disappointing results.

Other drugs in the experimental pipeline are a long way off. Few make the jump from efficacy in animals to efficacy in people. Fewer still prove safe enough to be used widely.

Holmes’s approach, if it works, would sidestep these problems. He has been testing etanercept, a drug already widely used for rheumatoid arthritis. It blocks the action of TNF-alpha, one of the signalling molecules, or cytokines, that immune cells use to communicate with each other. In the next few months, he expects the results of a pilot trial in people with Alzheimer’s. If they are positive, he’ll test the strategy in people with only the mildest, early forms of the disease.

“If we can show that this approach works,” says Holmes, leaning forward in his chair, “then since we already know a hell of a lot about the pharmacology of these drugs, I’m naive enough to think that they could be made available for people with the disease or in the early stages of the disease and we can move very quickly into clinical application.”

It seems too good to be true. Why, then, has the multibillion-pound drug industry not at least tested this theory? There are roughly 35 million people in the world with this devastating, memory-robbing disease, and with an increasingly ageing population, this number is expected to rise to 115 million by 2050. It’s not as if there is no financial incentive.

The answer may lie in the unconventional route by which the idea that Holmes and his colleagues are testing reached this point. It started life as an evolutionary theory about something few people have even considered: why we feel ill when we have an infection. The year was 1980. Benjamin Hart, a vet and behavioural scientist at the University of California, Davis, had been grappling with how animals in the wild manage so well without veterinary interventions such as immunisations or antibiotics. He’d attended a lecture on the benefits of fever and how it suppresses bacterial growth. But fever is an energy-consuming process; the body has to spend 13% more energy for every 1°C rise in body temperature.

“So I started thinking about how animals act when they’re sick,” says Hart. “They get depressed, they lose their appetite. But if fever is so important, what they need is more food to fuel the fever. It didn’t stack up.”

After a few years of mulling this over, Hart published a paper in 1985 on what he termed “sickness behaviour”. Lethargy, depression and loss of appetite were not, as had been assumed, merely a consequence of infection, but a programmed, normal response to it that conferred a clear survival advantage in the wild. If an animal moves around less when ill, it is less likely to pick up another infection; if it eats or drinks less, it is less likely to ingest another toxin.

“The body goes into a do-or-die, make-or-break mode,” says Hart. “In the wild, an animal can afford not to eat for a while if it means avoiding death. It allows the immune system to get going. You do this – and this is the important bit – before you’re debilitated, when it’s still going to do you some good.”

The next crucial step came from across the Atlantic, in Bordeaux. In a series of experiments published between 1988 and 1994, Robert Dantzer, a vet turned biologist, showed three things. First, that inflammatory cytokines in the blood, even in the absence of infection, were enough to bring about sickness behaviour. Second, that these cytokines, produced by immune cells called macrophages, a type of white blood cell, signal along sensory nerves to inform the brain of an infection. And third, that this signal is relayed to microglial cells, the brain’s resident macrophages, which in turn secrete further cytokines that bring about sickness behaviours: lethargy, depression and loss of appetite.

Dantzer, who has since moved to the University of Texas, had thus dispelled one of the biggest dogmas in neurology – that emotions and behaviours always stemmed from the activity of neurons and neurotransmitters. He showed that in times of trouble, the immune system seizes control of the brain to use behaviour and emotions as an extension of the immune system and to ensure the full participation of the body in fighting infection.

In late April 1996, he organised a meeting in Saint-Jean-de-Luz, on the Bay of Biscay in south-west France, to bring together researchers interested in cytokines in the brain. It was there, in a chance encounter over breakfast in the hotel lobby, that he met Hugh Perry, a neuroscientist from Oxford University.

Perry is now at Southampton University. Sitting in his office, surrounded by framed pictures of neurons, he lights up as he recounts that meeting with Dantzer. “I remember as he told me about this idea of sickness behaviour thinking, ‘Wait a minute, this is a really interesting idea’. And then when he said that the innate immune cells in the brain must be involved in the process, a bell rang.” He puts down his coffee and rings an imaginary bell above his head. “Ding, ding, ding – so what if instead of a normal brain, it was a diseased brain. What happens then?”

Perry had just started working on a mouse model of neurodegenerative disease. He was studying prion disease, a degenerative brain disease, to see how the brain’s immune system responds to the death of neurons. He moved his laboratory from Oxford to Southampton the year after meeting Dantzer, and the idea travelled with him.

He injected his mice with a bacterial extract to induce sickness behaviour and, instead of a normal response, saw something extraordinary. His mice with brain disease fared substantially worse than those with healthy brains. They were highly susceptible to the inflammation and showed an exaggerated sickness behaviour response, and they stayed ill even after the healthy mice had recovered.

This, Perry explains, is because one of the microglial cells’ many roles is to patrol the brain and scavenge debris, such as the misfolded proteins in prion disease, and damaged cells. “When there’s ongoing brain disease, the microglial cells increase in number and become what’s called primed,” he says, referring to the process by which the immune system learns to deal more efficiently with harmful stimuli. “When primed, they make a bigger, more aggressive response to a secondary stimulus.”

When the signal of an infection elsewhere in the body reached the diseased brains of the mice, their already primed microglial cells switched into this aggressive mode. They began, as usual, to secrete cytokines to modulate behaviour, but at such high concentrations that the cytokines became toxic, killing neurons.

“But you never really know how your findings will translate to humans,” Perry adds. “So all this mouse stuff could be great, but is there any real importance to it? Does it matter? And that’s when I called Clive.”

Perry hadn’t met Clive Holmes at that point, but had heard of him through Southampton University’s Neuroscience Group, which brings basic researchers and clinicians together. He called him in 2001 to ask whether people with Alzheimer’s disease got worse after an infection. The answer was a categorical yes.

“And then Perry asked me if there was evidence to show this,” remembers Holmes. “And I was sure there must be. It was so clinically obvious. Everybody who works with Alzheimer’s just knows it. But when I looked into it, there was no evidence – nobody had really looked at it.”

In several studies since, Holmes and Perry have provided that evidence. Patients with Alzheimer’s do indeed fare worse cognitively after an infection. But infection is not the only trigger. Chronic inflammatory conditions such as rheumatoid arthritis or diabetes, which many elderly patients have and which also lead to the production of inflammatory cytokines, seem to play an important part too.

Holmes and Perry speculate that the characteristic amyloid beta deposits in the brains of these individuals prime the microglial cells, and that when signals of inflammation arrive, whether from an infection or a low-grade chronic inflammatory condition, the microglial cells switch into their aggressive, neuron-killing mode. This, they think, is why removing amyloid beta might not be working: the damage, in this case the priming of the microglial cells, may already have been done, so the killing of neurons continues unabated.

“So next year if these initial results look promising,” says Holmes, “we’re hoping to try and block TNF-alpha in people with the early stages of Alzheimer’s to block this peripheral signal before the disease fully takes hold. We want to see what kind of a dent on disease progression we can get. I don’t know what that’s going to be, but that’s what we’re going to find out.”

If all goes according to plan, and he can secure funding to start the trial at the beginning of next year, Holmes will, by mid-2017, find out for sure whether he can stop the disease taking hold. But the results of his trial in people with late-stage disease, due in the next few months, will give him a strong indication of what to expect.